Introduction To A/B Testing: Definition, Challenges, And Best Practices

The utility of A/B testing tools for finding the content with the best conversion rates is well understood. The tools also continue to mature and now include A/B/X testing, the ability to test multiple combinations to determine the best mix. They also point to further potential: deeper analysis of site data using a data warehouse. A data warehouse is not always the first thought in digital marketing, but this pairing can supply analysis results that are useful across the company.

In this article, we'll define A/B testing and discuss how to overcome common challenges by using best practices and improved techniques. We'll then expand the discussion to address how solid digital content and proper management of your data are tied to a successful marketing strategy. We'll also explain why merging your data into a data warehouse is a good practice for organizations with large amounts of data to analyze. This can be done with or without ETL tools; see alooma.com for a useful overview of the ETL process. Finally, we'll touch on how you can put all of your information and tools together to achieve better results, and what you need to learn to pull it all off.

What Is A/B Testing Anyway?

A/B testing compares two different versions of a web page to see which one performs better. Each visitor is shown one version chosen at random, and the site records conversions for each version and uses statistics to check which page converts better.
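
As a rough illustration of the two steps described above (random assignment, then a statistical comparison), here is a minimal Python sketch. It assumes a two-proportion z-test, which is one common way to compare conversion rates but is not necessarily what any particular A/B testing tool uses. The function names and the visitor counts are hypothetical.

```python
import math
import random


def assign_variant(rng: random.Random) -> str:
    """Pick version A or B for a visitor with equal probability."""
    return rng.choice(["A", "B"])


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) for the difference B minus A."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("each variant needs at least one visitor")
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate under the assumption that A and B perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot compare variants with 0% or 100% pooled conversion")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the example is repeatable
    print("First visitor sees version", assign_variant(rng))

    # Hypothetical counts: 200 of 5000 visitors converted on A, 250 of 5000 on B.
    z, p = two_proportion_z_test(200, 5000, 250, 5000)
    print(f"z = {z:.2f}, p-value = {p:.4f}")
```

A small p-value suggests the difference between the two versions is unlikely to be chance alone. It does not say how large the real improvement is.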

From a marketing perspective, A/B testing takes the guesswork out of optimizing a website for desired actions (conversions). Strategic marketing decisions become data-driven, making it easier to craft an ideal marketing strategy for a website. Marketers can test different website headlines, call-to-action buttons, graphics, sales copy, and product descriptions.

A/B Testing Challenges

Some challenges of A/B testing for marketers are:

- Small sample sizes. Marketers are not statisticians; they want results, and they want them quickly. A/B tests are often called too early, with sample sizes for each page variation that the average statistician would scoff at. Marketers need to learn about sample sizes, in particular how larger samples reduce the chance that an observed difference is just random noise.

- Coming up with a hypothesis. You need to discover where the problems lie with your site and come up with a hypothesis, that is, a proposed explanation based on limited evidence that a test can then support or disprove. Otherwise, you end up testing random things and learning nothing. Consider the issue of very few clicks on a CTA button. A hypothesis could be as simple as: "People aren't clicking the button because of its location. A different location on the page might work better because the button will be easier to see."

- Dealing with failed tests. When a test fails, marketers are inclined to move on to another page. Iterative testing is important, though. You should run tests, learn from them even if they fail, and establish a new hypothesis. It can take multiple follow-up tests to see real changes in conversions—don't give up on a page after one test fails to improve it.
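
To make the sample size point concrete, the sketch below estimates how many visitors each variation needs before a test can reliably detect a given lift. It assumes the standard normal-approximation formula for comparing two proportions, with a 5% significance level and 80% power as defaults. These are common conventions, not values from this article, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, expected: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per variant to detect baseline -> expected."""
    if baseline == expected:
        raise ValueError("baseline and expected rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (baseline + expected) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline * (1 - baseline) + expected * (1 - expected))
    ) ** 2
    return math.ceil(numerator / (expected - baseline) ** 2)


if __name__ == "__main__":
    # Hypothetical goal: detect a lift from a 4% to a 5% conversion rate.
    print(sample_size_per_variant(0.04, 0.05))
```

Under these assumptions, detecting a one-point lift from a 4% baseline takes several thousand visitors per variation, and smaller lifts take far more. This is why tests that are called after a few hundred visits are rarely conclusive.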

A/B Testing Best Practices

Some best practices for implementing effective A/B tests are:

- Brainstorm. Sit down with other people who work on your site (web designers, data analysts, etc.) and discuss the major problems with the website. Prioritize the issues that affect user experience most, and create hypotheses based on the data supplied by analysts or online BI tools.

- Use meaningful metrics. Choose metrics that tie directly to the action you want users to take, so the results of your A/B tests tell you what to change next.

- Challenge the status quo. Test values that challenge your preconceived ideas of what drives conversions. Consider CTA button copy: instead of testing “join” versus “sign up”, test something more creative or personal.

How To Learn From Your A/B Tests

Look at multiple metrics, not just conversions. To get the “big picture”, also consider the effect of each variation on other areas, such as SEO.

Enhance the results of A/B tests by capturing extra data that reveals insights on user behavior, such as heatmaps, scroll maps, or visitor recordings. An A/B test on a navigation bar, for example, is only worthwhile if people actually use the navigation bar. Tracking user behavior can provide more revealing insights than the A/B test alone.

Build a knowledge repository that documents each A/B test and its results, including user behavior data. You can refer to your knowledge repository when coming up with hypotheses for future tests.
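
A knowledge repository can start very small. The sketch below is one possible shape for it, assuming each test is stored as one JSON line in a plain file. The record fields and function names are hypothetical and chosen only to mirror the items mentioned above (hypothesis, results, user behavior data).

```python
import json
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class ExperimentRecord:
    page: str                   # page or element that was tested
    hypothesis: str             # what the test set out to check
    variants: dict[str, float]  # variant name -> observed conversion rate
    outcome: str                # short summary of what was learned
    behavior_notes: list[str] = field(default_factory=list)  # heatmap or recording findings


def save_record(record: ExperimentRecord, path: Path) -> None:
    """Append one test record to the repository file as a JSON line."""
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")


def find_records(path: Path, page: str) -> list[ExperimentRecord]:
    """Return all stored records for a given page, oldest first."""
    if not path.exists():
        return []
    records = []
    with path.open(encoding="utf-8") as f:
        for line in f:
            if line.strip():
                record = ExperimentRecord(**json.loads(line))
                if record.page == page:
                    records.append(record)
    return records
```

Before planning a new test on a page, a call such as `find_records(Path("ab_tests.jsonl"), "/pricing")` would list what has already been tried there. The file name and page path here are placeholders.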

Digital Content And Conversion

As a digital marketer, you understandably worry about conversion rates. The prime purpose of A/B testing tools is to provide content comparison, ensuring that the pages with the highest anticipated conversion rates are on your site. These tools allow analysts to more easily determine which content is most effective, without beta testing on site visitors.

Today, content is everything. Sites must have a lot of high-quality content, and it needs to be refreshed on a regular basis. High conversion rates also require that your customers keep coming back, and new content is one way to keep them interested. Videos, blogs, white papers, webinars and new products are all part of a company’s web presence today.

This variety of content makes A/B testing more important and more difficult. The time spent to create a professional video is wasted if the page sits by the wayside or if visitors view the video and then click off.

A Data Warehouse Gold Mine

Do not let all that data gather dust. Adding your analytics and metrics to a data warehouse gives you a wealth of information you can analyze in many ways. Imports from a database are simple and place all of that data in one location. Work with your information technology staff and business analysts to plan how this data will be included in your data warehouse. Perhaps a segmented data mart storing only your site data fits your needs.

This does not have to be complex or expensive. There are many tools and services to help you build a data repository if your business is not already using a data warehouse. Current databases can be integrated into a data warehouse, for example, thanks to a MySQL data connector. A repository can add considerable value to your business intelligence efforts. Research the options and you will find one that suits your needs and budget.
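
To show what having site data and business data in one place makes possible, here is a small sketch that uses Python's built-in `sqlite3` module as a stand-in for a data warehouse. The table names, columns, and rows are invented for illustration; a real warehouse would be loaded from your own analytics and sales systems.

```python
import sqlite3


def build_demo_warehouse() -> sqlite3.Connection:
    """Create an in-memory database with made-up A/B test and order data."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE ab_assignments (
            visitor_id TEXT PRIMARY KEY,
            variant    TEXT NOT NULL,
            converted  INTEGER NOT NULL  -- 1 if the visitor converted, else 0
        );
        CREATE TABLE orders (visitor_id TEXT NOT NULL, revenue REAL NOT NULL);
    """)
    conn.executemany(
        "INSERT INTO ab_assignments VALUES (?, ?, ?)",
        [("v1", "A", 0), ("v2", "A", 1), ("v3", "B", 1), ("v4", "B", 1)],
    )
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [("v2", 20.0), ("v3", 15.0), ("v3", 5.0), ("v4", 30.0)],
    )
    return conn


def variant_summary(conn: sqlite3.Connection) -> list[tuple]:
    """Return (variant, visitors, conversion rate, revenue) for each variant."""
    return conn.execute("""
        SELECT a.variant,
               COUNT(*)                    AS visitors,
               AVG(a.converted)            AS conversion_rate,
               COALESCE(SUM(o.revenue), 0) AS revenue
        FROM ab_assignments AS a
        -- Total orders per visitor first, so repeat buyers are not double counted.
        LEFT JOIN (
            SELECT visitor_id, SUM(revenue) AS revenue
            FROM orders
            GROUP BY visitor_id
        ) AS o ON o.visitor_id = a.visitor_id
        GROUP BY a.variant
        ORDER BY a.variant
    """).fetchall()


if __name__ == "__main__":
    for row in variant_summary(build_demo_warehouse()):
        print(row)
```

The join is the point of the example: once test assignments and orders sit in the same store, a single query can relate a page variation to revenue instead of only to clicks.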

Putting It All Together

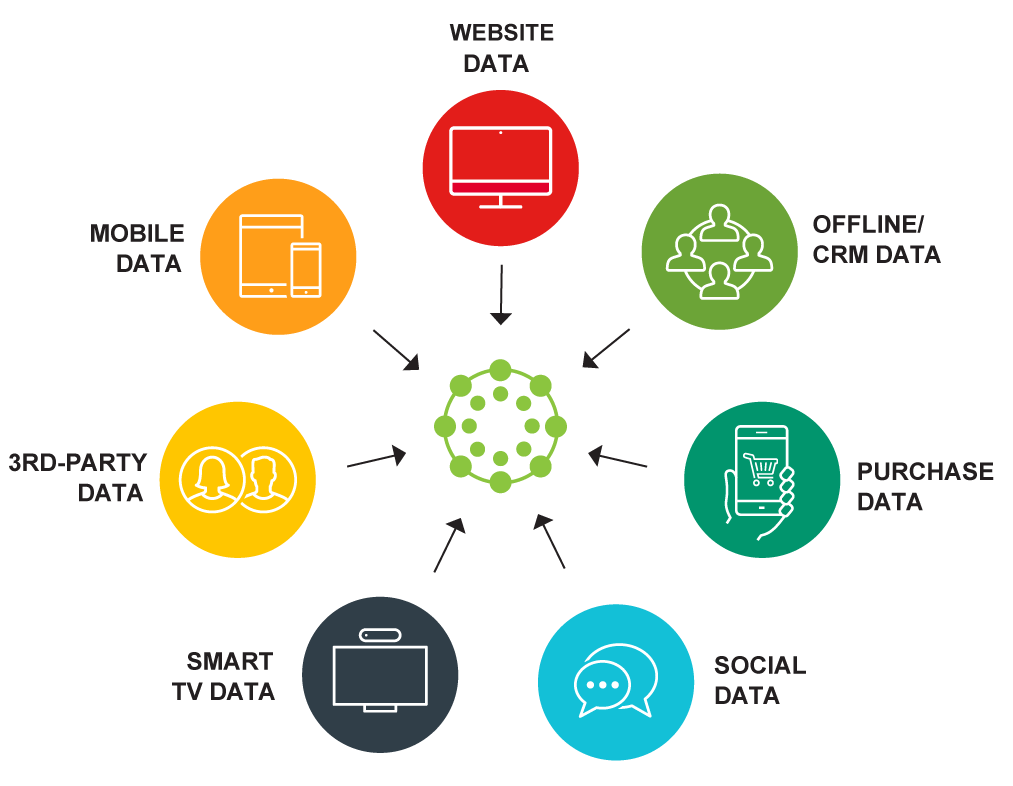

Now that you can access information about your web presence from a variety of angles (forecasts, testing results, metrics, history), your analytics work can truly support your business goals. Your site does not exist as a silo, and combining web analytics with other business data allows for unprecedented insight. What can you do with all that data?

Integrating A/B testing results with actual site statistics and web analytics lets you run what-if scenarios and confirm that your A/B testing tool produces accurate results when compared with site traffic history. You can discover whether new content is attracting new customers, or use a combination of data analysis and A/B testing to forecast sales in a new market.

One way to combine the data is a Customer Relationship Management (CRM) system, which can be used to analyze the direct effect of your web efforts on customers and sales. Such a holistic view is necessary to stay competitive in a global market.

Understanding Where To Begin

To approach your online marketing strategy with a holistic view requires a set of complex tools and technologies, all of which can help you obtain deeper knowledge of customer data, answer burning business questions, and improve conversion rates. But before you begin to adopt new technologies, you might need to take a step back and re-familiarize yourself with the web analytics and BI space, identify the latest business intelligence trends, and map out the connections between the different concepts and technologies.

The team at CoolaData – maker of an end-to-end big data behavioral analytics platform – took on a project to make the available content about web analytics and BI for digital marketing more accessible. They built the Web Analytics & BI Wiki, the first knowledge hub that collects all the relevant information on the subject and organizes it in a meaningful structure.

A central realization behind the wiki is that there is a huge knowledge gap between the average marketing professional, web analyst and the seasoned big data pro. So they set out to build a comprehensive resource that brings together thousands of expert opinions, how-to guides, case studies and real-life examples – written by bloggers, analytics experts, industry analysts and even competitors – in the intersection between marketing, web analytics, BI and data science.
